RDD Operations

transformations_actions.xlsx

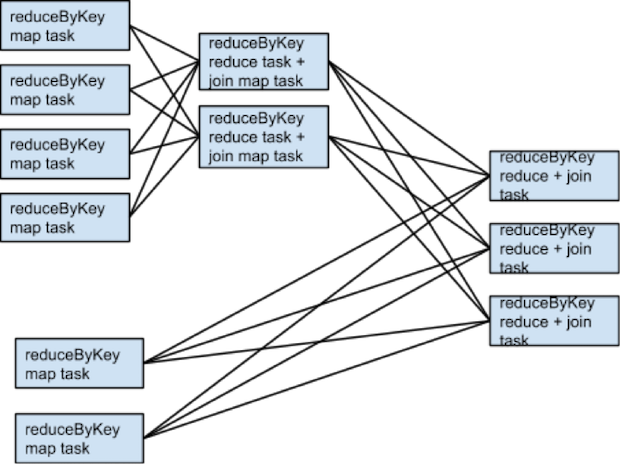

RDDs support two types of operations: transformations, which create a new dataset from an existing one, and actions, which return a value to the driver program after running a computation on the dataset. For example, map is a transformation that passes each dataset element through a function and returns a new RDD representing the results. On the other hand, reduce is an action that aggregates all the elements of the RDD using some function and returns the final result to the driver program (although there is also a parallel reduceByKey that returns a distributed dataset).
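The distinction can be tried out with a minimal sketch like the one below. It assumes a local Spark session (the app name, `local[2]` master, and sample words are illustrative, not from the original text) and is meant for `spark-shell` or a Scala script with Spark on the classpath.

```scala
import org.apache.spark.sql.SparkSession

// Local session for illustration only; app name and master are assumptions.
val spark = SparkSession.builder.appName("rddOperations").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical sample data.
val words = sc.parallelize(Seq("spark", "rdd", "spark", "action"))

// map is a transformation: it returns a new RDD and does not run a job yet.
val lengths = words.map(_.length)

// reduce is an action: it runs the job and returns a plain Int to the driver.
val totalLength: Int = lengths.reduce(_ + _)

// reduceByKey is a transformation: its result is still a distributed RDD,
// so collect() (an action) is needed to bring the pairs to the driver.
val countsRdd = words.map(w => (w, 1)).reduceByKey(_ + _)
val counts: Map[String, Int] = countsRdd.collect().toMap

println(s"totalLength=$totalLength counts=$counts")
spark.stop()
```

Note that `reduce` hands back a local value, while `reduceByKey` only describes another RDD until an action such as `collect` is called on it.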

All transformations in Spark are lazy, in that they do not compute their results right away. Instead, they just remember the transformations applied to some base dataset (e.g. a file). The transformations are only computed when an action requires a result to be returned to the driver program. This design enables Spark to run more efficiently. For example, we can realize that a dataset created through map will be used in a reduce and return only the result of the reduce to the driver, rather than the larger mapped dataset.
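One way to observe this laziness is to count how often the function passed to `map` actually runs. The sketch below is only an illustration under assumptions: the accumulator name and the helper `lazyDemo` are hypothetical, and accumulator updates made inside a transformation can be applied more than once if a task is retried, so this is a demonstration rather than a reliable counting technique.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.sql.SparkSession

// Local session for illustration only; app name and master are assumptions.
val spark = SparkSession.builder.appName("lazyEvaluation").master("local[2]").getOrCreate()

// Hypothetical helper: keeping the closure inside a method means it only
// captures the local accumulator, not the surrounding script object.
def lazyDemo(sc: SparkContext): (Long, Int, Long) = {
  val calls = sc.longAccumulator("mapCalls")

  // Defining the transformation does not invoke the function.
  val doubled = sc.parallelize(1 to 5).map { x => calls.add(1L); x * 2 }
  val before: Long = calls.value

  // The action triggers the computation; only the reduced value is returned.
  val total = doubled.reduce(_ + _)
  val after: Long = calls.value

  (before, total, after)
}

val (callsBefore, total, callsAfter) = lazyDemo(spark.sparkContext)
println(s"calls before action=$callsBefore, total=$total, calls after action=$callsAfter")
spark.stop()
```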

By default, each transformed RDD may be recomputed each time you run an action on it. However, you may also persist an RDD in memory using the persist (or cache) method, in which case Spark will keep the elements around on the cluster for much faster access the next time you query it. There is also support for persisting RDDs on disk, or replicated across multiple nodes.
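As a rough sketch of persistence, the example below marks an RDD as persisted, runs two actions on it, and then releases it. The data and names are assumptions made for illustration; `StorageLevel.MEMORY_ONLY` is the level that `cache()` uses for RDDs, and levels such as `StorageLevel.DISK_ONLY` or `StorageLevel.MEMORY_ONLY_2` cover the on-disk and replicated cases mentioned above.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.storage.StorageLevel

// Local session for illustration only; app name and master are assumptions.
val spark = SparkSession.builder.appName("persistSketch").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical dataset: the squares of 1..1000 as Long values.
val squares = sc.parallelize(1 to 1000).map(x => x.toLong * x)

// Mark the RDD for in-memory persistence; nothing is computed yet.
squares.persist(StorageLevel.MEMORY_ONLY)

// The first action computes the partitions and stores them.
val n = squares.count()

// A later action can reuse the stored partitions instead of recomputing the map.
val sumOfSquares = squares.reduce(_ + _)

val level = squares.getStorageLevel

// Release the stored blocks once the RDD is no longer needed.
squares.unpersist()
spark.stop()
```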

Transformations

The following table lists some of the common transformations supported by Spark. Refer to the RDD API doc (Scala, Java, Python, R) and pair RDD functions doc (Scala, Java) for details.

| Transformation | Meaning |

|---|---|

| map(func) | Return a new distributed dataset formed by passing each element of the source through a function func. |

| filter(func) | Return a new dataset formed by selecting those elements of the source on which func returns true. |

| flatMap(func) | Similar to map, but each input item can be mapped to 0 or more output items (so func should return a Seq rather than a single item). |

| mapPartitions(func) | Similar to map, but runs separately on each partition (block) of the RDD, so func must be of type Iterator<T> => Iterator<U> when running on an RDD of type T. |

| mapPartitionsWithIndex(func) | Similar to mapPartitions, but also provides func with an integer value representing the index of the partition, so func must be of type (Int, Iterator<T>) => Iterator<U> when running on an RDD of type T. |

| sample(withReplacement, fraction, seed) | Sample a fraction fraction of the data, with or without replacement, using a given random number generator seed. |

| union(otherDataset) | Return a new dataset that contains the union of the elements in the source dataset and the argument. |

| intersection(otherDataset) | Return a new RDD that contains the intersection of elements in the source dataset and the argument. |

| distinct([numTasks]) | Return a new dataset that contains the distinct elements of the source dataset. |

| groupByKey([numTasks]) | When called on a dataset of (K, V) pairs, returns a dataset of (K, Iterable<V>) pairs. Note: If you are grouping in order to perform an aggregation (such as a sum or average) over each key, using reduceByKey or aggregateByKey will yield much better performance. Note: By default, the level of parallelism in the output depends on the number of partitions of the parent RDD. You can pass an optional numTasks argument to set a different number of tasks. |

| reduceByKey(func, [numTasks]) | When called on a dataset of (K, V) pairs, returns a dataset of (K, V) pairs where the values for each key are aggregated using the given reduce function func, which must be of type (V,V) => V. Like in groupByKey, the number of reduce tasks is configurable through an optional second argument. |

| aggregateByKey(zeroValue)(seqOp, combOp, [numTasks]) | When called on a dataset of (K, V) pairs, returns a dataset of (K, U) pairs where the values for each key are aggregated using the given combine functions and a neutral "zero" value. Allows an aggregated value type that is different than the input value type, while avoiding unnecessary allocations. Like in groupByKey, the number of reduce tasks is configurable through an optional second argument. |

| sortByKey([ascending], [numTasks]) | When called on a dataset of (K, V) pairs where K implements Ordered, returns a dataset of (K, V) pairs sorted by keys in ascending or descending order, as specified in the boolean ascending argument. |

| join(otherDataset, [numTasks]) | When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (V, W)) pairs with all pairs of elements for each key. Outer joins are supported through leftOuterJoin, rightOuterJoin, and fullOuterJoin. |

| cogroup(otherDataset, [numTasks]) | When called on datasets of type (K, V) and (K, W), returns a dataset of (K, (Iterable<V>, Iterable<W>)) tuples. This operation is also called groupWith. |

| cartesian(otherDataset) | When called on datasets of types T and U, returns a dataset of (T, U) pairs (all pairs of elements). |

| pipe(command, [envVars]) | Pipe each partition of the RDD through a shell command, e.g. a Perl or bash script. RDD elements are written to the process's stdin and lines output to its stdout are returned as an RDD of strings. |

| coalesce(numPartitions) | Decrease the number of partitions in the RDD to numPartitions. Useful for running operations more efficiently after filtering down a large dataset. |

| repartition(numPartitions) | Reshuffle the data in the RDD randomly to create either more or fewer partitions and balance it across them. This always shuffles all data over the network. |

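| repartitionAndSortWithinPartitions(partitioner) | Repartition the RDD according to the given partitioner and, within each resulting partition, sort records by their keys. This is more efficient than calling repartition and then sorting within each partition because it can push the sorting down into the shuffle machinery. |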
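A few of the pair-RDD transformations from the table can be compared side by side. The sketch below is illustrative only: the `sales` records, the app name, and the choice of a `HashPartitioner` with 2 partitions are assumptions, not part of the original table.

```scala
import org.apache.spark.HashPartitioner
import org.apache.spark.sql.SparkSession

// Local session for illustration only; app name and master are assumptions.
val spark = SparkSession.builder.appName("pairRddSketch").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical (key, amount) records.
val sales = sc.parallelize(Seq(("a", 1), ("b", 4), ("a", 3), ("b", 6), ("c", 5)))

// groupByKey ships every value across the shuffle before summing.
val viaGroup = sales.groupByKey().mapValues(_.sum).collect().toMap

// reduceByKey combines values within each partition first, then shuffles.
val viaReduce = sales.reduceByKey(_ + _).collect().toMap

// aggregateByKey allows a result type (sum, count) that differs from the value type Int.
val averages = sales
  .aggregateByKey((0, 0))(
    (acc, v) => (acc._1 + v, acc._2 + 1),  // seqOp: fold one value into the accumulator
    (x, y) => (x._1 + y._1, x._2 + y._2))  // combOp: merge accumulators across partitions
  .mapValues { case (total, n) => total.toDouble / n }
  .collect().toMap

// repartitionAndSortWithinPartitions: keys come out sorted inside each partition.
val partitions = sales
  .repartitionAndSortWithinPartitions(new HashPartitioner(2))
  .glom().collect()
val keysSortedInEachPartition = partitions.forall { p =>
  val keys = p.map(_._1).toSeq
  keys == keys.sorted
}

spark.stop()
```

`viaGroup` and `viaReduce` hold the same totals; the difference is only in how much data crosses the shuffle.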

Actions

The following table lists some of the common actions supported by Spark. Refer to the RDD API doc (Scala, Java, Python, R) and pair RDD functions doc (Scala, Java) for details.

| Action | Meaning |

|---|---|

| reduce(func) | Aggregate the elements of the dataset using a function func (which takes two arguments and returns one). The function should be commutative and associative so that it can be computed correctly in parallel. |

| collect() | Return all the elements of the dataset as an array at the driver program. This is usually useful after a filter or other operation that returns a sufficiently small subset of the data. |

| count() | Return the number of elements in the dataset. |

| first() | Return the first element of the dataset (similar to take(1)). |

| take(n) | Return an array with the first n elements of the dataset. |

| takeSample(withReplacement, num, [seed]) | Return an array with a random sample of num elements of the dataset, with or without replacement, optionally pre-specifying a random number generator seed. |

| takeOrdered(n, [ordering]) | Return the first n elements of the RDD using either their natural order or a custom comparator. |

| saveAsTextFile(path) | Write the elements of the dataset as a text file (or set of text files) in a given directory in the local filesystem, HDFS or any other Hadoop-supported file system. Spark will call toString on each element to convert it to a line of text in the file. |

| saveAsSequenceFile(path) (Java and Scala) | Write the elements of the dataset as a Hadoop SequenceFile in a given path in the local filesystem, HDFS or any other Hadoop-supported file system. This is available on RDDs of key-value pairs that implement Hadoop's Writable interface. In Scala, it is also available on types that are implicitly convertible to Writable (Spark includes conversions for basic types like Int, Double, String, etc). |

| saveAsObjectFile(path) (Java and Scala) | Write the elements of the dataset in a simple format using Java serialization, which can then be loaded using SparkContext.objectFile(). |

| countByKey() | Only available on RDDs of type (K, V). Returns a hashmap of (K, Long) pairs with the count of each key. |

| foreach(func) | Run a function func on each element of the dataset. This is usually done for side effects such as updating an Accumulator or interacting with external storage systems. Note: modifying variables other than Accumulators outside of the foreach() may result in undefined behavior. See Understanding closures for more details. |

The Spark RDD API also exposes asynchronous versions of some actions, like foreachAsync for foreach, which immediately return a FutureAction to the caller instead of blocking on completion of the action. This can be used to manage or wait for the asynchronous execution of the action.
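The sketch below exercises several of these actions, including the asynchronous `foreachAsync`. It is an illustration under assumptions: the sample numbers, the accumulator name, the helper `asyncSum`, and the 30-second timeout are all hypothetical choices.

```scala
import org.apache.spark.SparkContext
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.SparkSession
import scala.concurrent.Await
import scala.concurrent.duration._

// Local session for illustration only; app name and master are assumptions.
val spark = SparkSession.builder.appName("actionsSketch").master("local[2]").getOrCreate()
val sc = spark.sparkContext

// Hypothetical data split over two partitions.
val nums = sc.parallelize(Seq(5, 3, 8, 1, 9, 2), numSlices = 2)

val firstTwo = nums.take(2)                               // first elements in partition order
val smallest = nums.takeOrdered(3)                        // natural ordering
val largest = nums.takeOrdered(2)(Ordering[Int].reverse)  // custom ordering

// countByKey returns a local Map of key -> count on the driver.
val keyCounts = sc.parallelize(Seq(("a", 1), ("b", 1), ("a", 1))).countByKey()

// Hypothetical helper: foreachAsync returns a FutureAction immediately,
// and the driver waits for it explicitly with Await.
def asyncSum(sc: SparkContext, rdd: RDD[Int]): Long = {
  val seen = sc.longAccumulator("seen")
  val future = rdd.foreachAsync(x => seen.add(x.toLong))
  Await.result(future, 30.seconds)
  seen.value
}
val accumulated = asyncSum(sc, nums)

spark.stop()
```

Between the call to `foreachAsync` and `Await.result`, the driver is free to do other work or to cancel the `FutureAction`.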

Attachment (transformations_actions_datasetupdated0322.xlsx)

The table below is easier to read if you download the attached file.

| Dataset API setup | import org.apache.spark.SparkContext; import org.apache.spark.SparkConf; import org.apache.spark.sql.SparkSession; val spark = SparkSession.builder.appName("mapExample").master("local").getOrCreate(); import spark.implicits._; val sc = spark.sparkContext | |||||

| Return type | Method | Description | Example | Result | RDD impact | |

|---|---|---|---|---|---|---|

| Dataset<Row> | agg(Column expr, Column... exprs) | Aggregates on the entire Dataset without groups. Is this an operation that merges the values when the column is built as a list? | import org.apache.spark.sql.functions._; val ds = Seq((1, 1, 2L), (1, 2, 3L), (1, 3, 4L), (2, 1, 5L)).toDS(); ds.groupBy("_1").agg(collect_list("_2") as "list").show(false) | +---+---------+ |_1 |list | +---+---------+ |1 |[1, 2, 3]| |2 |[1] | +---+---------+ | ||

| Dataset<Row> | agg(Column expr, scala.collection.Seq<Column> exprs) | Aggregates on the entire Dataset without groups. | ds.groupBy("_1").agg(count("*"), sum("_2"), sum("_3")).show(false) | +---+--------+-------+-------+ |_1 |count(1)|sum(_2)|sum(_3)| +---+--------+-------+-------+ |1 |3 |6 |9 | |2 |1 |1 |5 | +---+--------+-------+-------+ | ||

| Dataset<Row> | agg(scala.collection.immutable.Map<String,String> exprs) | (Scala-specific) Aggregates on the entire Dataset without groups. | ||||

| Dataset<Row> | agg(scala.Tuple2<String,String> aggExpr, scala.collection.Seq<scala.Tuple2<String,String>> aggExprs) | (Scala-specific) Aggregates on the entire Dataset without groups. | ||||

| Dataset<T> | alias(String alias) | Returns a new Dataset with an alias set. | ds.groupBy("_1").agg(count("*"), sum("_2").alias("summing"), sum("_3")).show(false) | +---+--------+-------+-------+ |_1 |count(1)|summing|sum(_3)| +---+--------+-------+-------+ |1 |3 |6 |9 | |2 |1 |1 |5 | +---+--------+-------+-------+ | ||

| Dataset<T> | alias(scala.Symbol alias) | (Scala-specific) Returns a new Dataset with an alias set. | ||||

| Column | apply(String colName) | Selects column based on the column name and return it as a Column. | ds.apply("_1").toString() | |||

| Dataset<T> | as(String alias) | Returns a new Dataset with an alias set. | ||||

| Dataset<T> | as(scala.Symbol alias) | (Scala-specific) Returns a new Dataset with an alias set. | ||||

| Dataset<T> | cache() | Persist this Dataset with the default storage level (MEMORY_AND_DISK). | ds.cache() | |||

| Dataset<T> | checkpoint() | Eagerly checkpoint a Dataset and return the new Dataset. | ||||

| Dataset<T> | checkpoint(boolean eager) | Returns a checkpointed version of this Dataset. | ||||

| scala.reflect.ClassTag<T> | classTag() | |||||

| Dataset<T> | coalesce(int numPartitions) | Returns a new Dataset that has exactly numPartitions partitions. | ||||

| Column | col(String colName) | Selects column based on the column name and return it as a Column. | ||||

| Object | collect() | Returns an array that contains all of Rows in this Dataset. | ds.select("_1").collect() | |||

| java.util.List<T> | collectAsList() | Returns a Java list that contains all of Rows in this Dataset. | ||||

| String[] | columns() | Returns all column names as an array. | ||||

| long | count() | Returns the number of rows in the Dataset. | ||||

| void | createGlobalTempView(String viewName) | Creates a global temporary view using the given name. | A global temporary view is shared across all sessions and stays alive until the Spark application terminates. In my case it was stored on the master node's hard disk. | |||

| void | createOrReplaceTempView(String viewName) | Creates a local temporary view using the given name. | ||||

| void | createTempView(String viewName) | Creates a local temporary view using the given name. | ||||

| Dataset<Row> | crossJoin(Dataset<?> right) | Explicit cartesian join with another DataFrame. | ||||

| RelationalGroupedDataset | cube(Column... cols) | Create a multi-dimensional cube for the current Dataset using the specified columns, so we can run aggregation on them. | ||||

| RelationalGroupedDataset | cube(scala.collection.Seq<Column> cols) | Create a multi-dimensional cube for the current Dataset using the specified columns, so we can run aggregation on them. | cube behavior | |||

| RelationalGroupedDataset | cube(String col1, scala.collection.Seq<String> cols) | Create a multi-dimensional cube for the current Dataset using the specified columns, so we can run aggregation on them. | ||||

| RelationalGroupedDataset | cube(String col1, String... cols) | Create a multi-dimensional cube for the current Dataset using the specified columns, so we can run aggregation on them. | ||||

| Dataset<Row> | describe(scala.collection.Seq<String> cols) | Computes statistics for numeric and string columns, including count, mean, stddev, min, and max. | ds.describe().show | Simple averages | ||

| Dataset<Row> | describe(String... cols) | Computes statistics for numeric and string columns, including count, mean, stddev, min, and max. | ||||

| Dataset<T> | distinct() | Returns a new Dataset that contains only the unique rows from this Dataset. | Removes duplicates (only rows that match exactly) | |||

| Dataset<Row> | drop(Column col) | Returns a new Dataset with a column dropped. | ds.drop("_2").show | +---+---+ | _1| _3| +---+---+ | 1| 2| | 1| 3| | 1| 4| | 2| 5| +---+---+ | ||

| Dataset<Row> | drop(scala.collection.Seq<String> colNames) | Returns a new Dataset with columns dropped. | ||||

| Dataset<Row> | drop(String... colNames) | Returns a new Dataset with columns dropped. | ||||

| Dataset<Row> | drop(String colName) | Returns a new Dataset with a column dropped. | ||||

| Dataset<T> | dropDuplicates() | Returns a new Dataset that contains only the unique rows from this Dataset. | ||||

| Dataset<T> | dropDuplicates(scala.collection.Seq<String> colNames) | (Scala-specific) Returns a new Dataset with duplicate rows removed, considering only the subset of columns. | ds.dropDuplicates.show | +---+---+ | _1| _3| +---+---+ | 1| 2| | 1| 3| | 1| 4| | 2| 5| +---+---+ | ||

| Dataset<T> | dropDuplicates(String[] colNames) | Returns a new Dataset with duplicate rows removed, considering only the subset of columns. | ds.dropDuplicates("_1").show | +---+---+---+ | _1| _2| _3| +---+---+---+ | 1| 1| 2| | 2| 1| 5| +---+---+---+ | ||

| Dataset<T> | dropDuplicates(String col1, scala.collection.Seq<String> cols) | Returns a new Dataset with duplicate rows removed, considering only the subset of columns. | ||||

| Dataset<T> | dropDuplicates(String col1, String... cols) | Returns a new Dataset with duplicate rows removed, considering only the subset of columns. | ||||

| scala.Tuple2<String,String>[] | dtypes() | Returns all column names and their data types as an array. | ds.dtypes | Array[(String, String)] = Array((_1,IntegerType), (_2,IntegerType), (_3,LongType)) | ||

| Dataset<T> | except(Dataset<T> other) | Returns a new Dataset containing rows in this Dataset but not in another Dataset. | ||||

| void | explain() | Prints the physical plan to the console for debugging purposes. | ||||

| void | explain(boolean extended) | Prints the plans (logical and physical) to the console for debugging purposes. | ||||

| Dataset<T> | filter(Column condition) | Filters rows using the given condition. | ||||

| T | first() | Returns the first row. | ||||

| void | foreach(ForeachFunction<T> func) | (Java-specific) Runs func on each element of this Dataset. | ||||

| void | foreach(scala.Function1<T,scala.runtime.BoxedUnit> f) | Applies a function f to all rows. | ||||

| void | foreachPartition(ForeachPartitionFunction<T> func) | (Java-specific) Runs func on each partition of this Dataset. | ||||

| void | foreachPartition(scala.Function1<scala.collection.Iterator<T>,scala.runtime.BoxedUnit> f) | Applies a function f to each partition of this Dataset. | ||||

| RelationalGroupedDataset | groupBy(Column... cols) | Groups the Dataset using the specified columns, so we can run aggregation on them. | ||||

| RelationalGroupedDataset | groupBy(scala.collection.Seq<Column> cols) | Groups the Dataset using the specified columns, so we can run aggregation on them. | ||||

| RelationalGroupedDataset | groupBy(String col1, scala.collection.Seq<String> cols) | Groups the Dataset using the specified columns, so that we can run aggregation on them. | ||||

| RelationalGroupedDataset | groupBy(String col1, String... cols) | Groups the Dataset using the specified columns, so that we can run aggregation on them. | ||||

| T | head() | Returns the first row. | ||||

| Object | head(int n) | Returns the first n rows. | ||||

| String[] | inputFiles() | Returns a best-effort snapshot of the files that compose this Dataset. | in.inputFiles.foreach(println) | file:///D:/scala/test2/input.txt | Lists the input files | |

| Dataset<T> | intersect(Dataset<T> other) | Returns a new Dataset containing rows only in both this Dataset and another Dataset. | ||||

| boolean | isLocal() | Returns true if the collect and take methods can be run locally (without any Spark executors). | ds.isLocal; ds.cube("_1", "_2").sum().isLocal | true false | Lets you check whether the Dataset runs locally or not | |

| boolean | isStreaming() | Returns true if this Dataset contains one or more sources that continuously return data as it arrives. | Streaming format | Not sure what this is used for | ||

| JavaRDD<T> | javaRDD() | Returns the content of the Dataset as a JavaRDD of Ts. | ||||

| Dataset<Row> | join(Dataset<?> right) | Join with another DataFrame. | ||||

| Dataset<Row> | join(Dataset<?> right, Column joinExprs) | Inner join with another DataFrame, using the given join expression. | val ds = Seq((1, 1, "A"), (1, 2, "B"), (1, 3, "C"), (2, 1, "D")).toDS(); /* the values in ds2 are illustrative placeholders */ val ds2 = Seq(("A", 1), ("B", 2)).toDS(); ds.join(ds2, ds("_3") === ds2("_1")).show() | |||

| Dataset<Row> | join(Dataset<?> right, Column joinExprs, String joinType) | Join with another DataFrame, using the given join expression. | /* inner */ employees.join(departments, "DepartmentID").show(); /* outer */ employees.join(departments, Seq("DepartmentID"), "left_outer").show(); /* join types: inner, outer, left_outer, right_outer, leftsemi */ /* cartesian */ employees.join(departments).show(10); /* conditional (non-equi) */ orders.join(products, $"product" === $"name" && $"date" >= $"startDate" && $"date" <= $"endDate").show() | |||

| Dataset<Row> | join(Dataset<?> right, scala.collection.Seq<String> usingColumns) | Inner equi-join with another DataFrame using the given columns. | ||||

| Dataset<Row> | join(Dataset<?> right, scala.collection.Seq<String> usingColumns, String joinType) | Equi-join with another DataFrame using the given columns. | ||||

| Dataset<Row> | join(Dataset<?> right, String usingColumn) | Inner equi-join with another DataFrame using the given column. | ||||

| Dataset<T> | limit(int n) | Returns a new Dataset by taking the first n rows. | ||||

| DataFrameNaFunctions | na() | Returns a DataFrameNaFunctions for working with missing data. | df.show() // +-----+----+ // | name| age| // +-----+----+ // |alice| 35| // | bob|null| // | | 24| // +-----+----+ scala> df.na.fill(10.0,Seq("age")) // res4: org.apache.spark.sql.DataFrame = [name: string, age: int] scala> df.na.fill(10.0,Seq("age")).show // +-----+---+ // | name|age| // +-----+---+ // |alice| 35| // | bob| 10| // | | 24| // +-----+---+ scala> df.na.replace("age", Map(35 -> 61,24 -> 12)).show() // +-----+----+ // | name| age| // +-----+----+ // |alice| 61| // | bob|null| // | | 12| // +-----+----+ | Can this be used for updates and replacements? Is it OK to use? | ||

| static Dataset<Row> | ofRows(SparkSession sparkSession, org.apache.spark.sql.catalyst.plans.logical.LogicalPlan logicalPlan) | |||||

| Dataset<T> | orderBy(Column... sortExprs) | Returns a new Dataset sorted by the given expressions. | OrderBy is just an alias for the sort function | |||

| Dataset<T> | orderBy(scala.collection.Seq<Column> sortExprs) | Returns a new Dataset sorted by the given expressions. | OrderBy is just an alias for the sort function | |||

| Dataset<T> | orderBy(String sortCol, scala.collection.Seq<String> sortCols) | Returns a new Dataset sorted by the given expressions. | OrderBy is just an alias for the sort function | |||

| Dataset<T> | orderBy(String sortCol, String... sortCols) | Returns a new Dataset sorted by the given expressions. | OrderBy is just an alias for the sort function | |||

| Dataset<T> | persist() | Persist this Dataset with the default storage level (MEMORY_AND_DISK). | ||||

| Dataset<T> | persist(StorageLevel newLevel) | Persist this Dataset with the given storage level. | ||||

| void | printSchema() | Prints the schema to the console in a nice tree format. | ds.printSchema() | root |-- _1: integer (nullable = false) |-- _2: integer (nullable = false) |-- _3: long (nullable = false) | ||

| org.apache.spark.sql.execution.QueryExecution | queryExecution() | | ds.queryExecution | == Parsed Logical Plan == LocalRelation [_1#3, _2#4, _3#5L] == Analyzed Logical Plan == _1: int, _2: int, _3: bigint LocalRelation [_1#3, _2#4, _3#5L] == Optimized Logical Plan == LocalRelation [_1#3, _2#4, _3#5L] == Physical Plan == LocalTableScan [_1#3, _2#4, _3#5L] | Not sure what this is used for | |

| Dataset<T>[] | randomSplit(double[] weights) | Randomly splits this Dataset with the provided weights. | val ds = Seq((1, 1, 2L), (1, 2, 3L), (1, 3, 4L), (2, 1, 5L),(2,2,5L), (4,4,5L), (5,5,5L), (1,2,3L),(9,9,9L)).toDS() ds.randomSplit(Array(0.3,0.2,0.5),5).foreach(t=>t.show) | +---+---+---+ | _1| _2| _3| +---+---+---+ | 1| 1| 2| | 5| 5| 5| | 9| 9| 9| +---+---+---+ +---+---+---+ | _1| _2| _3| +---+---+---+ | 1| 3| 4| | 2| 1| 5| +---+---+---+ +---+---+---+ | _1| _2| _3| +---+---+---+ | 1| 2| 3| | 1| 2| 3| | 2| 2| 5| | 4| 4| 5| +---+---+---+ | ||

| Dataset<T>[] | randomSplit(double[] weights, long seed) | Randomly splits this Dataset with the provided weights. | ||||

| java.util.List<Dataset<T>> | randomSplitAsList(double[] weights, long seed) | Returns a Java list that contains randomly split Dataset with the provided weights. | ||||

| RDD<T> | rdd() | Represents the content of the Dataset as an RDD of T. | ||||

| Dataset<T> | repartition(Column... partitionExprs) | Returns a new Dataset partitioned by the given partitioning expressions, using spark.sql.shuffle.partitions as number of partitions. | See the RDD description | |||

| Dataset<T> | repartition(int numPartitions) | Returns a new Dataset that has exactly numPartitions partitions. | See the RDD description | |||

| Dataset<T> | repartition(int numPartitions, Column... partitionExprs) | Returns a new Dataset partitioned by the given partitioning expressions into numPartitions. | ||||

| Dataset<T> | repartition(int numPartitions, scala.collection.Seq<Column> partitionExprs) | Returns a new Dataset partitioned by the given partitioning expressions into numPartitions. | ||||

| Dataset<T> | repartition(scala.collection.Seq<Column> partitionExprs) | Returns a new Dataset partitioned by the given partitioning expressions, using spark.sql.shuffle.partitions as number of partitions. | ||||

| RelationalGroupedDataset | rollup(Column... cols) | Create a multi-dimensional rollup for the current Dataset using the specified columns, so we can run aggregation on them. | val ds = Seq((1, 1, 2L), (1, 2, 3L), (1, 3, 4L), (2, 1, 5L),(2,2,5L), (4,4,5L), (5,5,5L), (1,2,3L),(9,9,9L)).toDS(); ds.show; ds.rollup("_1").count.show; ds.rollup("_1").agg(sum("_2")).show | +---+---+---+ | _1| _2| _3| +---+---+---+ | 1| 1| 2| | 1| 2| 3| | 1| 3| 4| | 2| 1| 5| | 2| 2| 5| | 4| 4| 5| | 5| 5| 5| | 1| 2| 3| | 9| 9| 9| +---+---+---+ +----+-----+ | _1|count| +----+-----+ | 1| 4| | 4| 1| |null| 9| | 2| 2| | 5| 1| | 9| 1| +----+-----+ +----+-------+ | _1|sum(_2)| +----+-------+ | 1| 8| | 4| 4| |null| 29| | 2| 3| | 5| 5| | 9| 9| +----+-------+ | ||

| RelationalGroupedDataset | rollup(scala.collection.Seq<Column> cols) | Create a multi-dimensional rollup for the current Dataset using the specified columns, so we can run aggregation on them. | ||||

| RelationalGroupedDataset | rollup(String col1, scala.collection.Seq<String> cols) | Create a multi-dimensional rollup for the current Dataset using the specified columns, so we can run aggregation on them. | ||||

| RelationalGroupedDataset | rollup(String col1, String... cols) | Create a multi-dimensional rollup for the current Dataset using the specified columns, so we can run aggregation on them. | ||||

| Dataset<T> | sample(boolean withReplacement, double fraction) | Returns a new Dataset by sampling a fraction of rows, using a random seed. | ||||

| Dataset<T> | sample(boolean withReplacement, double fraction, long seed) | Returns a new Dataset by sampling a fraction of rows, using a user-supplied seed. | ||||

| StructType | schema() | Returns the schema of this Dataset. | ||||

| Dataset<Row> | select(Column... cols) | Selects a set of column based expressions. | ||||

| Dataset<Row> | select(scala.collection.Seq<Column> cols) | Selects a set of column based expressions. | ||||

| Dataset<Row> | select(String col, scala.collection.Seq<String> cols) | Selects a set of columns. | ||||

| Dataset<Row> | select(String col, String... cols) | Selects a set of columns. | ||||

| Dataset<Row> | selectExpr(scala.collection.Seq<String> exprs) | Selects a set of SQL expressions. | ||||

| Dataset<Row> | selectExpr(String... exprs) | Selects a set of SQL expressions. | ||||

| void | show() | Displays the top 20 rows of Dataset in a tabular form. | ||||

| void | show(boolean truncate) | Displays the top 20 rows of Dataset in a tabular form. | ||||

| void | show(int numRows) | Displays the Dataset in a tabular form. | ||||

| void | show(int numRows, boolean truncate) | Displays the Dataset in a tabular form. | ||||

| void | show(int numRows, int truncate) | Displays the Dataset in a tabular form. | ||||

| Dataset<T> | sort(Column... sortExprs) | Returns a new Dataset sorted by the given expressions. | ds.sort(($"_1".desc)).show | +---+---+---+ | _1| _2| _3| +---+---+---+ | 9| 9| 9| | 5| 5| 5| | 4| 4| 5| | 2| 1| 5| | 2| 2| 5| | 1| 1| 2| | 1| 3| 4| | 1| 2| 3| | 1| 2| 3| +---+---+---+ | ||

| Dataset<T> | sort(scala.collection.Seq<Column> sortExprs) | Returns a new Dataset sorted by the given expressions. | ||||

| Dataset<T> | sort(String sortCol, scala.collection.Seq<String> sortCols) | Returns a new Dataset sorted by the specified column, all in ascending order. | ||||

| Dataset<T> | sort(String sortCol, String... sortCols) | Returns a new Dataset sorted by the specified column, all in ascending order. | ||||

| Dataset<T> | sortWithinPartitions(Column... sortExprs) | Returns a new Dataset with each partition sorted by the given expressions. | ||||

| Dataset<T> | sortWithinPartitions(scala.collection.Seq<Column> sortExprs) | Returns a new Dataset with each partition sorted by the given expressions. | ||||

| Dataset<T> | sortWithinPartitions(String sortCol, scala.collection.Seq<String> sortCols) | Returns a new Dataset with each partition sorted by the given expressions. | ||||

| Dataset<T> | sortWithinPartitions(String sortCol, String... sortCols) | Returns a new Dataset with each partition sorted by the given expressions. | ||||

| SparkSession | sparkSession() | |||||

| SQLContext | sqlContext() | |||||

| DataFrameStatFunctions | stat() | Returns a DataFrameStatFunctions for working with statistic functions. | What functions are available here? | |||

| StorageLevel | storageLevel() | Get the Dataset's current storage level, or StorageLevel.NONE if not persisted. | ||||

| Object | take(int n) | Returns the first n rows in the Dataset. | ||||

| java.util.List<T> | takeAsList(int n) | Returns the first n rows in the Dataset as a list. | ||||

| Dataset<Row> | toDF() | Converts this strongly typed collection of data to generic DataFrame. | ||||

| Dataset<Row> | toDF(scala.collection.Seq<String> colNames) | Converts this strongly typed collection of data to generic DataFrame with columns renamed. | ||||

| Dataset<Row> | toDF(String... colNames) | Converts this strongly typed collection of data to generic DataFrame with columns renamed. | ||||

| JavaRDD<T> | toJavaRDD() | Returns the content of the Dataset as a JavaRDD of Ts. | ||||

| Dataset<String> | toJSON() | Returns the content of the Dataset as a Dataset of JSON strings. | ||||

| java.util.Iterator<T> | toLocalIterator() | Return an iterator that contains all of Rows in this Dataset. | ||||

| String | toString() | |||||

| <U> Dataset<U> | transform(scala.Function1<Dataset<T>,Dataset<U>> t) | Concise syntax for chaining custom transformations. | ||||

| Dataset<T> | union(Dataset<T> other) | Returns a new Dataset containing union of rows in this Dataset and another Dataset. | ||||

| Dataset<T> | unpersist() | Mark the Dataset as non-persistent, and remove all blocks for it from memory and disk. | ||||

| Dataset<T> | unpersist(boolean blocking) | Mark the Dataset as non-persistent, and remove all blocks for it from memory and disk. | ||||

| Dataset<T> | where(Column condition) | Filters rows using the given condition. | ||||

| Dataset<T> | where(String conditionExpr) | Filters rows using the given SQL expression. | ||||

| Dataset<Row> | withColumn(String colName, Column col) | Returns a new Dataset by adding a column or replacing the existing column that has the same name. | ||||

| Dataset<Row> | withColumnRenamed(String existingName, String newName) | Returns a new Dataset with a column renamed. | ||||

| DataFrameWriter<T> | write() | Interface for saving the content of the non-streaming Dataset out into external storage. | ||||

| DataStreamWriter<T> | writeStream() | (Experimental) Interface for saving the content of the streaming Dataset out into external storage. | ||||
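Several entries in the table can be combined into one small session. The sketch below is an illustration under assumptions: it reuses a four-row tuple Dataset, and the view name `t`, the app name, and the specific column choices are hypothetical. It also touches `stat`, whose `DataFrameStatFunctions` include methods such as `corr`, `cov`, `crosstab`, `freqItems`, and `approxQuantile`.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions._

// Local session for illustration only; app name and master are assumptions.
val spark = SparkSession.builder.appName("datasetSketch").master("local[2]").getOrCreate()
import spark.implicits._

// Small tuple Dataset; columns are named _1, _2, _3 by default.
val ds = Seq((1, 1, 2L), (1, 2, 3L), (1, 3, 4L), (2, 1, 5L)).toDS()

// groupBy + agg with an alias, brought back to the driver in key order.
val sums = ds.groupBy("_1").agg(sum("_2").alias("summing"))
  .orderBy("_1").collect()
  .map(r => (r.getInt(0), r.getLong(1))).toSeq

// rollup adds subtotals along the column hierarchy; cube adds them for
// every combination of the columns, so it yields more rows.
val rollupRows = ds.rollup("_1", "_2").count().count()
val cubeRows = ds.cube("_1", "_2").count().count()

// A local temporary view makes the Dataset queryable through SQL.
ds.createOrReplaceTempView("t")
val viaSql = spark.sql("SELECT COUNT(*) FROM t WHERE _3 >= 4").first().getLong(0)

// stat() exposes statistic helpers, for example the Pearson correlation.
val corr = ds.stat.corr("_1", "_3")

println(s"sums=$sums rollupRows=$rollupRows cubeRows=$cubeRows viaSql=$viaSql corr=$corr")
spark.stop()
```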
